How Many GPUs Are Needed to Fine-Tune GEMMA-2 2B? | by

Precision: Using mixed precision (e.g., FP16) can reduce memory requirements. Example GPU Configurations: Single GPU (High-End): GPUs like …
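
A rough weights-only estimate shows why lower precision helps. This is a minimal sketch that assumes Gemma 2 2B has roughly 2.6 billion parameters (an approximation, not a figure from the snippet above). It ignores activations, KV cache and framework overhead, so treat the results as lower bounds. Mixed-precision training also commonly keeps an FP32 master copy of the weights, so training savings are smaller than this comparison suggests.

```python
# Weights-only memory estimate for a dense model at different precisions.
# ASSUMPTION: ~2.6e9 parameters for Gemma 2 2B; adjust for the exact checkpoint.
BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,
    "fp16": 2,
    "int8": 1,
}

GIB = 1024 ** 3


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / GIB


if __name__ == "__main__":
    params = 2.6e9  # hypothetical round figure for Gemma 2 2B
    for dtype in ("fp32", "fp16", "int8"):
        print(f"{dtype}: {weight_memory_gib(params, dtype):.1f} GiB")
```

Halving the bytes per parameter halves the footprint of the weights. Under the 2.6B assumption that is about 9.7 GiB in FP32 versus about 4.8 GiB in FP16.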

gemma-2-2b-it Model by Google | NVIDIA NIM

Please do not upload any confidential information or personal data unless expressly permitted. Your use is logged for security purposes.

Running Gemma 2 on an A4000 GPU | DigitalOcean

Google’s Gemma 2 with 9B and 27B parameters are finally here

… Dilemma question: Imagine you are a doctor with five patients who all need organ transplants to survive, but you don't have any …

Run the Gemma 2 9B LLM in Julia and without a GPU? - Machine

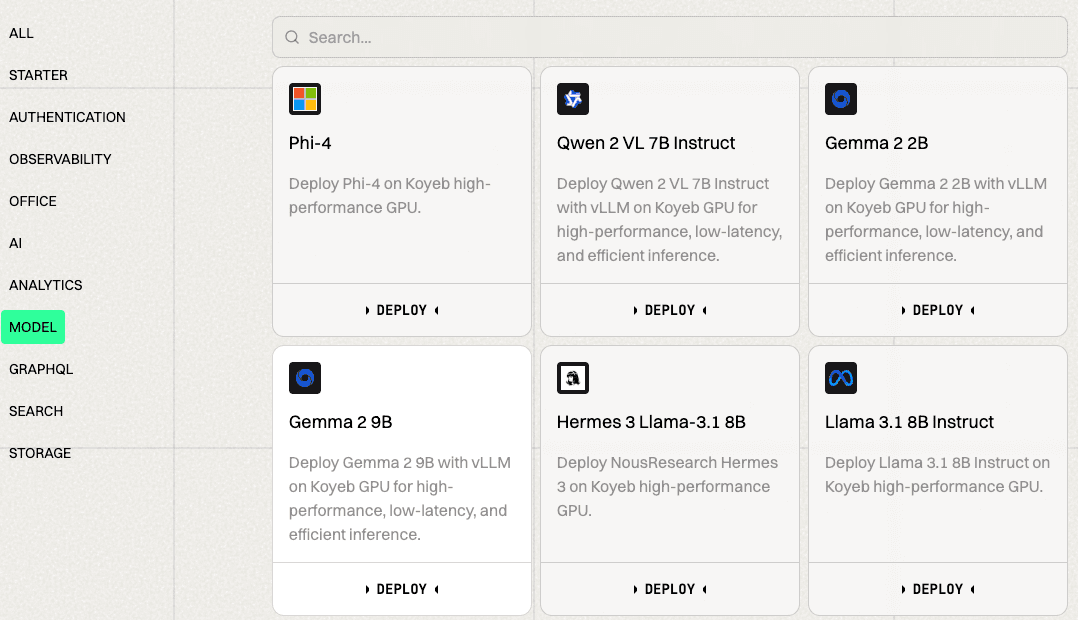

Changelog - Koyeb

I want to run the Gemma 2 9B large language model. I'm not interested in using it as a chatbot; rather, the reason I want to use it is the fact that it defines …

Support Matrix — NVIDIA NIM for Large Language Models (LLMs)

*Deploy Gemma 2 LLM with Text Generation Inference (TGI) on Google*

Gemma 2 2B: Generic configuration. Any NVIDIA GPU should be, but is not guaranteed to be, able to run this model with sufficient GPU memory …
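
"Sufficient GPU memory" can be made concrete with a rough fit check: weights plus KV cache plus a safety margin. This sketch assumes the following architecture values for Gemma 2 2B: 26 layers, 4 key/value heads and a head dimension of 256. Verify them against the checkpoint's config.json. The sketch also treats every layer as full attention and ignores Gemma 2's sliding-window layers, so the KV-cache term is an upper bound. The 20% overhead factor is a hypothetical margin for illustration, not an NVIDIA NIM requirement.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelShape:
    """Just the numbers needed for a rough memory estimate."""
    num_params: float
    num_layers: int
    num_kv_heads: int
    head_dim: int


# ASSUMPTION: approximate Gemma 2 2B values; verify against the checkpoint's config.json.
GEMMA2_2B = ModelShape(num_params=2.6e9, num_layers=26, num_kv_heads=4, head_dim=256)


def kv_cache_bytes(shape: ModelShape, tokens: int, batch: int = 1, bytes_per_value: int = 2) -> int:
    """Bytes for cached keys and values, treating every layer as full attention."""
    # Leading factor 2 = one key tensor plus one value tensor per layer.
    return 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * tokens * batch * bytes_per_value


def fits_on_gpu(
    shape: ModelShape,
    gpu_gib: float,
    tokens: int,
    batch: int = 1,
    bytes_per_value: int = 2,
    overhead: float = 1.2,  # hypothetical 20% margin (activations, CUDA context, fragmentation)
) -> bool:
    """Rough check: (weights + KV cache) * overhead <= GPU memory."""
    weights = shape.num_params * bytes_per_value
    needed = (weights + kv_cache_bytes(shape, tokens, batch, bytes_per_value)) * overhead
    return needed <= gpu_gib * GIB


if __name__ == "__main__":
    for gpu_gib in (6, 8, 16):
        print(f"{gpu_gib} GiB GPU:", fits_on_gpu(GEMMA2_2B, gpu_gib, tokens=8192))
```

Under these assumptions, one 8,192-token sequence in 16-bit precision needs 0.8125 GiB of KV cache, which is small next to the roughly 4.8 GiB of weights. The estimate therefore fits an 8 GiB card but not a 6 GiB one.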

Gemma-2-2B-IT | NVIDIA NGC

Smaller, Safer, More Transparent: Advancing Responsible AI with

*Fine-tuning Gemma 2 2B for custom data extraction, using Local GPU*

Here's how ShieldGemma can help you create safer, better AI applications: SOTA performance: Built on top of Gemma 2, ShieldGemma are the …

LLM Inference guide | Google AI Edge | Google AI for Developers

*🎉Google Developers can immediately try high-performance Gemma 2*

The Gemma-2 2B, Gemma 2B and Gemma 7B models are available as pre-converted models in the MediaPipe format. These models do not require any …

finetuning gemma2-2b with multi-gpu get OOM, how do i only do

Running Gemma 2 on an A4000 GPU

… process_index}, would require the model to fit in one GPU and that's why it's getting OOM. I have 4 A100s with 40GB VRAM each. Any help or …

Run Google's Gemma 2 model on a single GPU with Ollama: A Step-by …

NVIDIA NIM offers prebuilt containers for large language models (LLMs) that can be used to develop chatbots, content analyzers, or any …
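
Simple arithmetic is consistent with the out-of-memory report in the forum post about fine-tuning gemma2-2b on four 40 GB A100s. It holds if each process places a full replica of the model on its own GPU, as a per-process device map does under plain data parallelism. This sketch uses a common rule of thumb: about 16 bytes per parameter for mixed-precision training with Adam. That covers 16-bit weights and gradients, plus FP32 master weights and two FP32 optimizer moments. The 2.6B parameter count is an assumption. Activations are ignored, so real usage is higher. The function name and its `sharded` flag are hypothetical, not a library API.

```python
GIB = 1024 ** 3

# Rule of thumb for mixed-precision Adam, bytes per parameter:
# 2 (16-bit weights) + 2 (16-bit grads) + 4 (fp32 master weights) + 4 + 4 (Adam moments)
BYTES_PER_PARAM_ADAM_MIXED = 2 + 2 + 4 + 4 + 4


def model_state_gib_per_gpu(num_params: float, num_gpus: int = 1, sharded: bool = False) -> float:
    """Weights + gradients + optimizer state per GPU in GiB, excluding activations.

    Unsharded data parallelism keeps a full replica on every GPU, so adding
    GPUs does not lower the per-GPU figure. Fully sharded schemes
    (e.g. ZeRO stage 3 or FSDP) divide it across GPUs in the ideal case.
    """
    total = num_params * BYTES_PER_PARAM_ADAM_MIXED
    if sharded:
        total /= num_gpus
    return total / GIB


if __name__ == "__main__":
    params = 2.6e9  # ASSUMPTION: approximate Gemma 2 2B parameter count
    print(f"replicated on 4 GPUs:    {model_state_gib_per_gpu(params, 4):.1f} GiB per GPU")
    print(f"fully sharded on 4 GPUs: {model_state_gib_per_gpu(params, 4, sharded=True):.1f} GiB per GPU")
```

Under these assumptions, the replicated case needs about 38.7 GiB of model state per GPU before any activations, which is at or near the full capacity of a 40 GB card. The idealized fully sharded case needs about 9.7 GiB per GPU.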